Munawar Hafiz, CEO of OpenRefactory, writes this blog post.

- ChatGPT’s bug-finding capability is limited to small code snippets of fewer than a hundred lines of code.

- When it finds bugs correctly, its explanation of the bug is better than that of any static analysis tool on the market.

- ChatGPT makes mistakes in detecting bugs and suggesting fixes.

- ChatGPT’s answer appears to depend on how the question is asked, so it can be misled into giving wrong answers.

- Because its explanations are so convincing, ChatGPT’s mistakes may create even more confusion for an inexperienced programmer, who has no way of telling whether the answers are correct.

- OpenRefactory’s Intelligent Code Repair (iCR) integrates with Large Language Models (LLMs) such as ChatGPT to find bugs that other tools miss, report bugs with fewer false warnings, and provide better fixes for complex problems. iCR for Java 4.0, iCR for Python 4.0, and iCR for Go 2.0 will include these integrations.

Software Bug Detection With Large Language Models

Large Language Models emerged only recently (around 2018), but they have taken the world by storm because they appear to perform very well at a wide variety of tasks. The same model that creates a recipe for a chef can generate the code for a website for a web developer.

One of my colleagues, the CEO of a static application security testing (SAST) company, commented a couple of years ago, “This may be the technology that will drive all of us in the static analysis tool industry out of business.” So when ChatGPT, built on GPT-3.5, came out last year, we naturally approached it with caution.

We wanted to better understand ChatGPT’s bug detection capability. The first question we asked was whether it could be used as a drop-in replacement for existing static analysis tools. Then we looked more deeply into its capabilities.

Finding Bugs In Software Projects

Most static analysis tools take a source code repository as input and report their results. Can ChatGPT do the same?

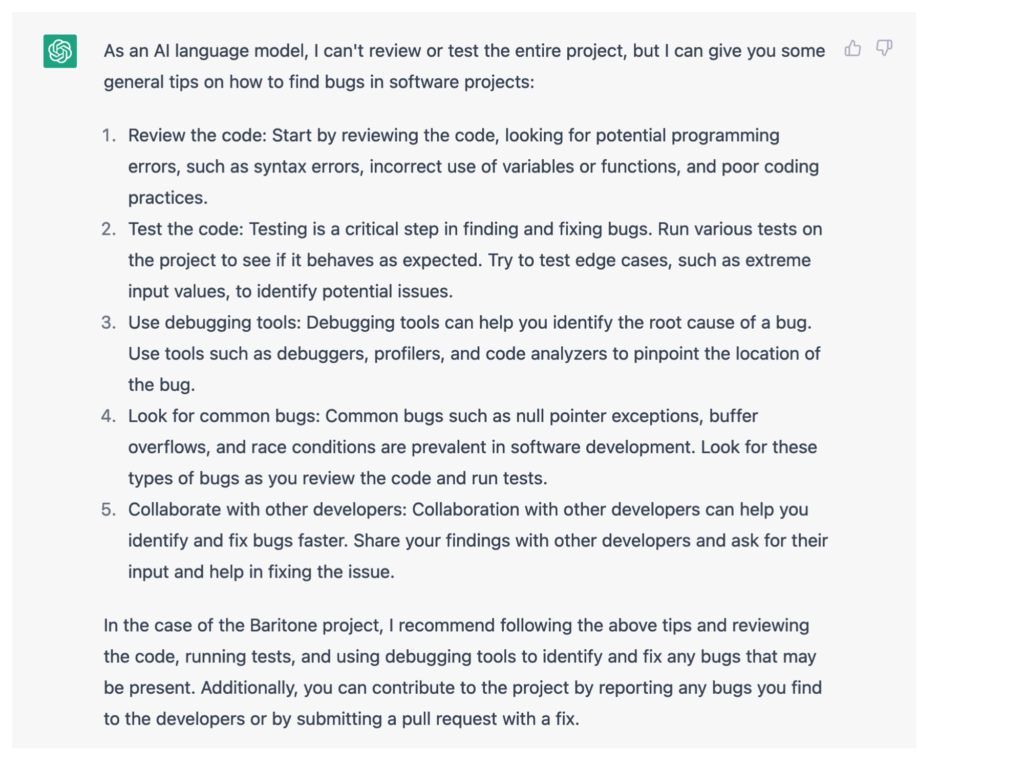

We asked ChatGPT the following question.

“Can you find bugs in the following project: <Sample GitHub URL>?”

We got the following reply:

ChatGPT tried to be supportive, but the reply was not helpful.

So we merged the code of a small project into a single file, pasted it into ChatGPT, and asked it to find bugs. The code was about 1,000 lines long. Despite this reduced scope, ChatGPT could not process the input because it exceeded its maximum input size.

Real programs are much, much larger than that.

Observations

- ChatGPT cannot scan entire projects and detect bugs the way other SAST tools do.

- It is perhaps only applicable to small code snippets of fewer than 100 lines. We’ll now look at that case in more depth.

Finding Bugs In Small Code Snippets

We tested ChatGPT’s bug detection capability by feeding it some small programs and asking questions about possible bugs in the code. We used some of the test programs from the Intelligent Code Repair (iCR) codebase, all of them fewer than 100 lines long.

For some programs, the results were very accurate. We presented a few sample programs with null dereference issues, and ChatGPT found the bugs with ease. Most impressive was the detailed explanation it gave. Neither iCR nor any other SAST tool on the market comes close to that clarity of explanation in natural language. It was as if a human code reviewer had found the bug and was explaining it while sitting beside me. Quite an unbelievable experience!

Then we tried some dataflow-related issues, such as OS command injection and SQL injection. ChatGPT found these too, again with excellent explanations.
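For readers less familiar with dataflow issues, here is a minimal Java sketch of the SQL injection pattern. It was written for this post and is not one of the iCR test programs; the class and method names are hypothetical. Untrusted input (the source) flows into the text of a SQL query (the sink). The safer variant binds the input through `java.sql.PreparedStatement`, so the driver treats it as data rather than as SQL.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;

// Hypothetical illustration, not taken from the iCR test suite.
class SqlInjectionDemo {

    // Vulnerable: untrusted input is concatenated directly into the SQL text,
    // so a crafted value can change the structure of the query.
    static String buildQueryUnsafe(String userName) {
        return "SELECT * FROM users WHERE name = '" + userName + "'";
    }

    // Safer: the query text is fixed and the input is bound as a parameter.
    static PreparedStatement buildQuerySafe(Connection conn, String userName)
            throws SQLException {
        PreparedStatement stmt =
                conn.prepareStatement("SELECT * FROM users WHERE name = ?");
        stmt.setString(1, userName);
        return stmt;
    }

    public static void main(String[] args) {
        // This input turns the WHERE clause into a condition that is always true.
        String attack = "x' OR '1'='1";
        System.out.println(buildQueryUnsafe(attack));
        // prints: SELECT * FROM users WHERE name = 'x' OR '1'='1'
    }
}
```

A dataflow analysis flags the unsafe version because tainted data reaches the query string without sanitization or parameter binding.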

However, as we continued to experiment with the small programs, several issues arose. Most importantly, ChatGPT made mistakes even on the small programs: it generated false warnings, and it suggested wrong fixes. The next two sections describe two examples.

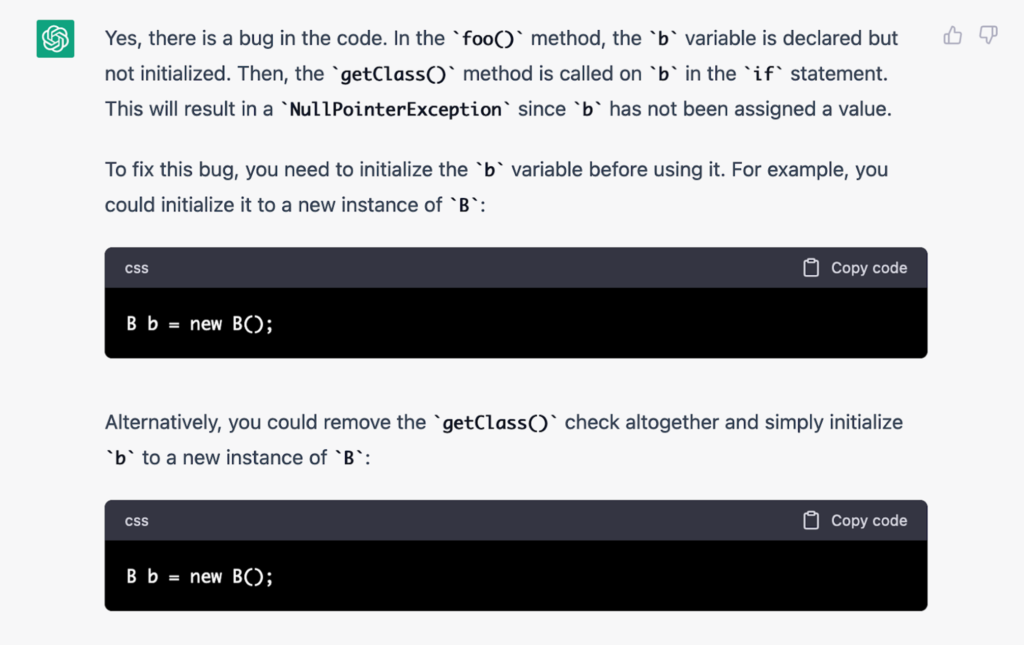

Null Dereference Issue Detected Correctly But The Fix Is Incorrect

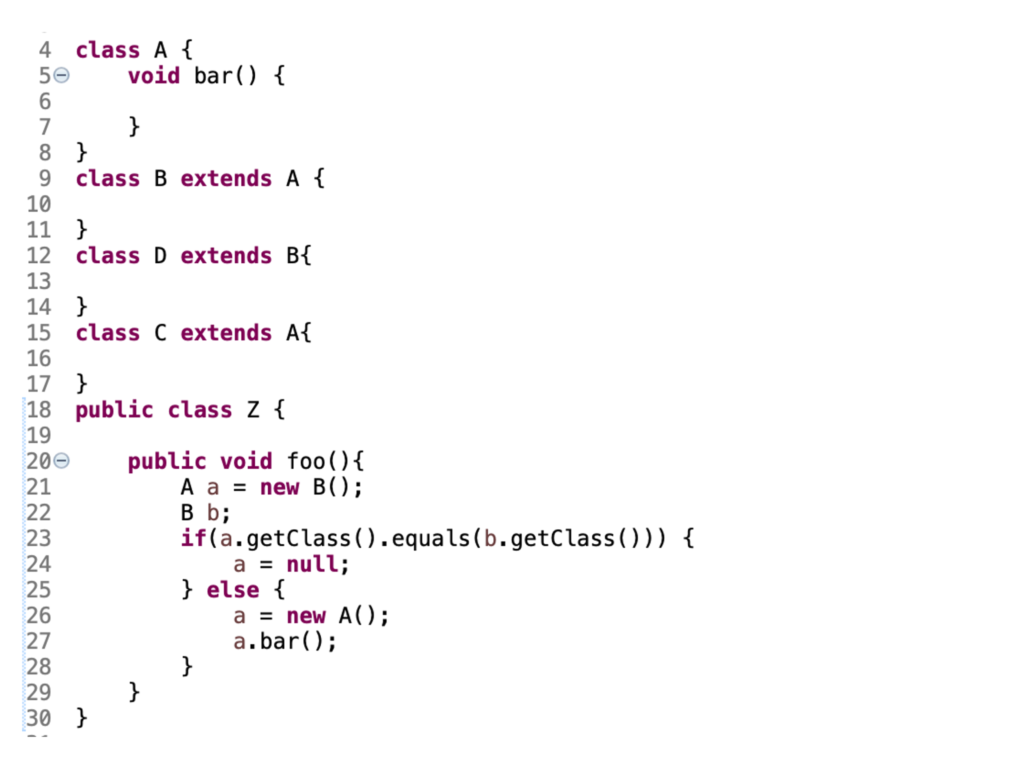

Here is a sample Java test program.

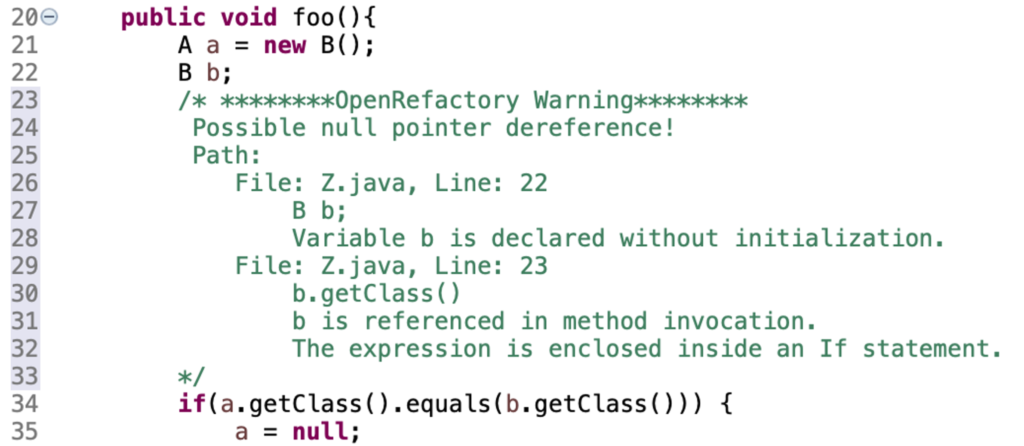

The null pointer dereference is pretty obvious in this case: the local variable b is declared on line 22 but never assigned a non-null value, so line 23 dereferences a null reference.
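The test program itself appears only in the screenshot, so the following is a hypothetical reconstruction of the same pattern rather than the actual code. One detail is worth noting. Java's definite-assignment rules reject a local variable that is used before any assignment ("variable b might not have been initialized"). For this pattern to compile, the variable must therefore be explicitly set to `null`. The class and method names below are illustrative only.

```java
// Hypothetical reconstruction of the null dereference pattern; not the iCR test program.
class NullDereferenceDemo {

    static int nameLength() {
        String b = null;     // declared locally; never given a non-null value
        return b.length();   // dereferences null: throws NullPointerException at runtime
    }

    public static void main(String[] args) {
        try {
            nameLength();
            System.out.println("no exception");
        } catch (NullPointerException e) {
            System.out.println("NullPointerException, as expected");
        }
    }
}
```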

iCR identifies the issue and generates this warning message.

Observations

- The bug detection was accurate, and the explanation was spot on.

- The fix suggestion is perhaps better than what automated program repair (APR) tools would suggest.

- The fixes were multi-line changes that altered program flow; in other words, they were fairly aggressive.

- The fixes, specifically the aggressive ones, are not always accurate.
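The observations above contrast small, local fixes with aggressive fixes that change control flow. ChatGPT's actual suggestions appear only in the screenshots, so the sketch below is a hypothetical illustration of the general risk. It does not reproduce those fixes, and its names are made up. Both versions remove the crash. However, the early return in the aggressive version also skips a later step that should still run.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical contrast between two fix styles for a null dereference.
class FixStyleDemo {

    // Conservative fix: guard only the dereference; every other statement
    // runs exactly as before.
    static List<String> conservative(String b) {
        List<String> log = new ArrayList<>();
        if (b != null) {
            log.add("length=" + b.length());
        }
        log.add("audit");   // side effect that must still happen
        return log;
    }

    // Aggressive fix: the early return avoids the crash but also skips
    // the audit step, silently changing program behavior.
    static List<String> aggressive(String b) {
        List<String> log = new ArrayList<>();
        if (b == null) {
            return log;     // bails out before the audit entry is recorded
        }
        log.add("length=" + b.length());
        log.add("audit");
        return log;
    }

    public static void main(String[] args) {
        System.out.println(conservative(null)); // [audit]
        System.out.println(aggressive(null));   // []
    }
}
```

For non-null input the two versions behave identically. They differ only on the null path, which is exactly where a reviewer has to check that the fix preserves the intended behavior.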

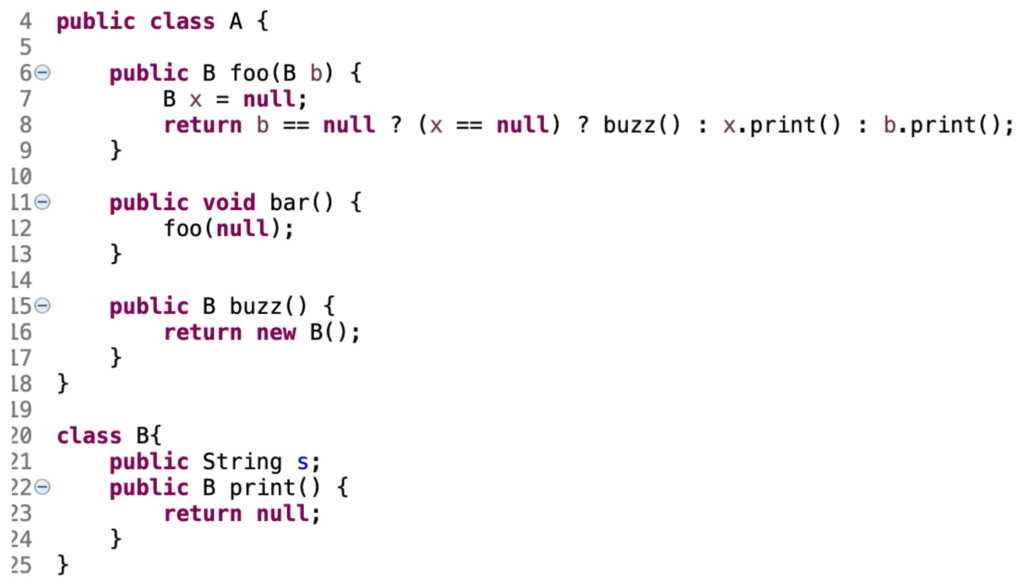

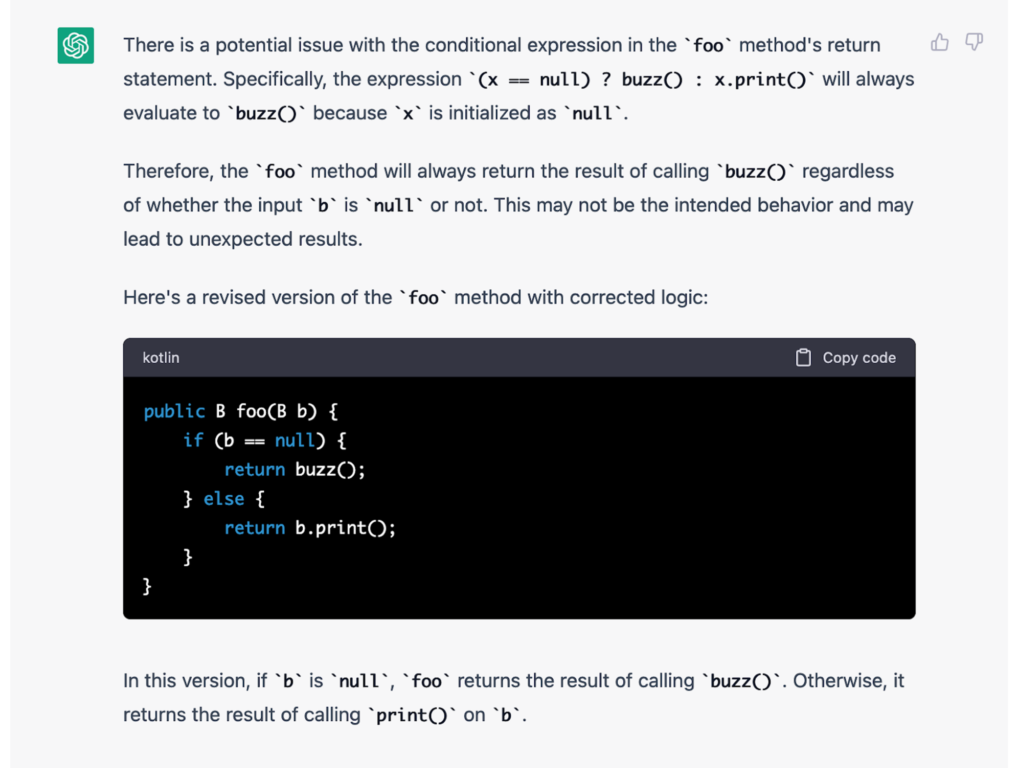

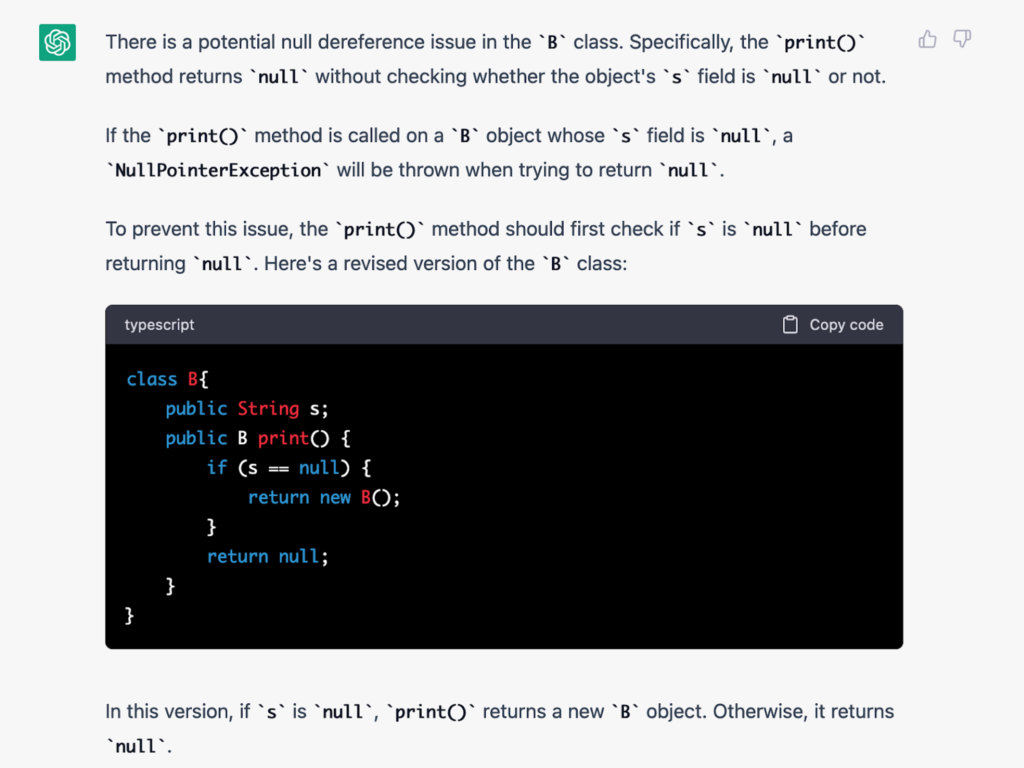

ChatGPT Tricked Into Giving a False Warning

Consider the following Java program.

So we probed whether there is a null pointer dereference by asking the following question.

“Is there a null dereference bug in the following code?”

This is what we got:

We had apparently managed to mislead ChatGPT. The explanation is just as clear, but it is totally wrong. It claims that the print method returns null, which should not be the case.

Observations

- Even for small programs, ChatGPT gives confusing results.

- ChatGPT can be misled to give wrong answers.

- Because ChatGPT works with natural language, it may (surprisingly) miss the syntactic sugar used in code.
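Here is an illustration of how syntactic sugar can blur the line between real and apparent null dereferences. The two Java constructs below were chosen for this post, and we are not claiming that either one appears in the program above. String concatenation with a null reference looks risky but is safe, because Java converts `null` to the text `"null"`. Auto-unboxing looks harmless but hides a real dereference. The class and method names are hypothetical.

```java
// Hypothetical examples of syntactic sugar around null; not the program from this post.
class SyntacticSugarDemo {

    // String concatenation with null is NOT a dereference: the null
    // reference is converted to the string "null", so nothing is thrown.
    static String concatWithNull() {
        Object value = null;
        return "value: " + value;
    }

    // Auto-unboxing IS a hidden dereference: assigning a null Integer to an
    // int implicitly calls intValue() and throws NullPointerException.
    static int unboxNull() {
        Integer boxed = null;
        int primitive = boxed;
        return primitive;
    }

    public static void main(String[] args) {
        System.out.println(concatWithNull()); // prints "value: null"
        try {
            unboxNull();
        } catch (NullPointerException e) {
            System.out.println("unboxing null threw NullPointerException");
        }
    }
}
```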

Summary

See the key points at the start of this post.

How can ChatGPT be used to detect bugs in real code? We believe the answer is to combine the strengths of static analysis tools and large language models in an innovative product. OpenRefactory is doing exactly that.

Version 2.0 of OpenRefactory’s iCR for Python already contains some fixers based on large language models. We are integrating even more LLM support into iCR for Java v4.0, iCR for Python v4.0, and iCR for Go v2.0.

Epilogue

- We plan to write a series of articles outlining the strengths and weaknesses of ChatGPT. Stay tuned.

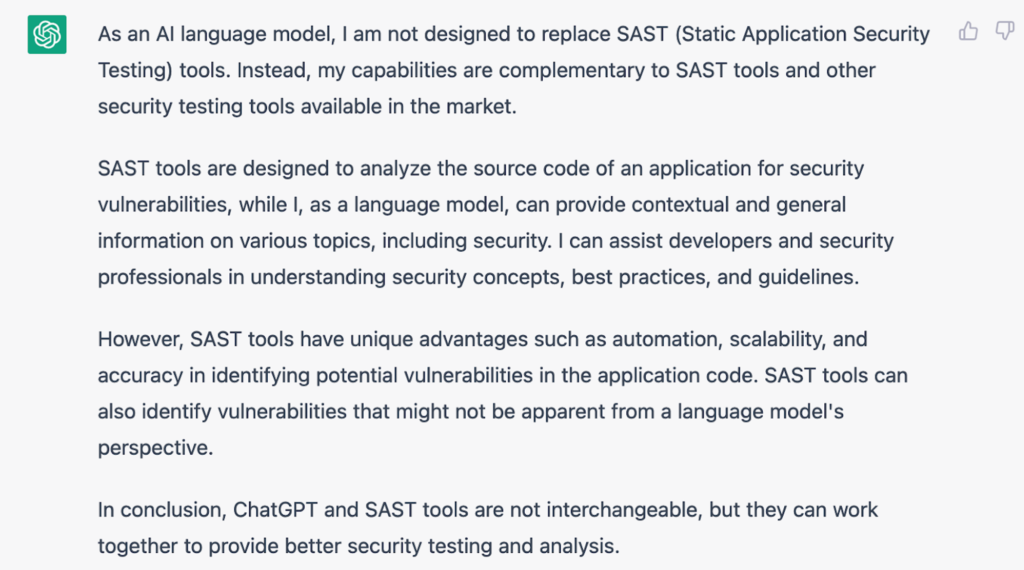

- We asked ChatGPT whether it will become a competitor in the SAST space.

“Is ChatGPT going to replace SAST tool?”

It was very polite in its response:

- We plan to take ChatGPT up on its offer!